A Research Agenda for Historical and Multilingual Optical Character Recognition

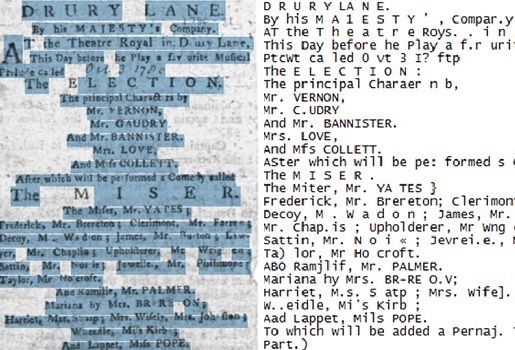

Over the last fifteen years, optical character recognition (OCR) technology has turned billions of images of books, newspapers, and other materials into text searchable at Google Books, the Internet Archive, the Library of Congress, Europeana, and elsewhere. The digital abundance now available to students, researchers, and general readers is concentrated largely in materials from the last 100 years, for which current systems perform well. Currently available OCR systems are not perfect even for the most modern documents, and they are much less well suited to older printed texts, whatever their language, and to manuscripts.

In this report, David A. Smith and Ryan Cordell of Northeastern University’s NULab for Texts, Maps, and Networks survey the current state of OCR for historical documents and recommend concrete steps that researchers, implementors, and funders can take to make progress over the next five to ten years. Advances in artificial intelligence for image recognition, natural language processing, and machine learning will drive significant progress. More importantly, sharing goals, techniques, and data among researchers in computer science, in book and manuscript studies, and in library and information sciences will open up exciting new problems and allow the community to allocate resources and measure progress.

This report was written with the generous support of The Andrew W. Mellon Foundation. The conclusions are solely the responsibility of the authors.

“A Research Agenda for Historical and Multilingual OCR” is now available.